Error 406

[TECH FASCISM]

NOT ACCEPTABLE

We were impressed by the inventiveness, urgency, and insightfulness of the applications we received in response to our first open call, Error 406 [Tech Fascism] Not Acceptable. The proposals explored powerful forms of resistance, refusal, and subversion: from instructional approaches and how-tos to interventions and collective practices that push back against tech fascism. It is inspiring to see so many projects challenge existing systems in very specific and diverse contexts using strategies like misdirection, slowing down, opting out, and collective reimagination, while building toward technologies that serve shared needs rather than exploit them.

We received an overwhelming 430 submissions from around the world and are happy to announce the selection of 13 projects by artists and collectives based in Austria, Brazil, France, Georgia, Germany, Mexico, Rojava, Turkey, Taiwan, the UK, and the USA. These projects, selected by our jury of Hito Steyerl, Nora O’Murchú, and Sam Lavigne, will receive a total of €50,866 in support and will be featured in an online exhibition in winter 2025/26.

While we regret that we are only able to support a fraction of the many outstanding proposed projects, it is heartening to see how many artists are already actively engaging with these critical questions. We sincerely thank everyone who applied!

Selected Projects

Jury & Selection Criteria

Read more about the selection criteria and the jury consisting of Hito Steyerl, Nora O’Murchú, and Sam Lavigne.

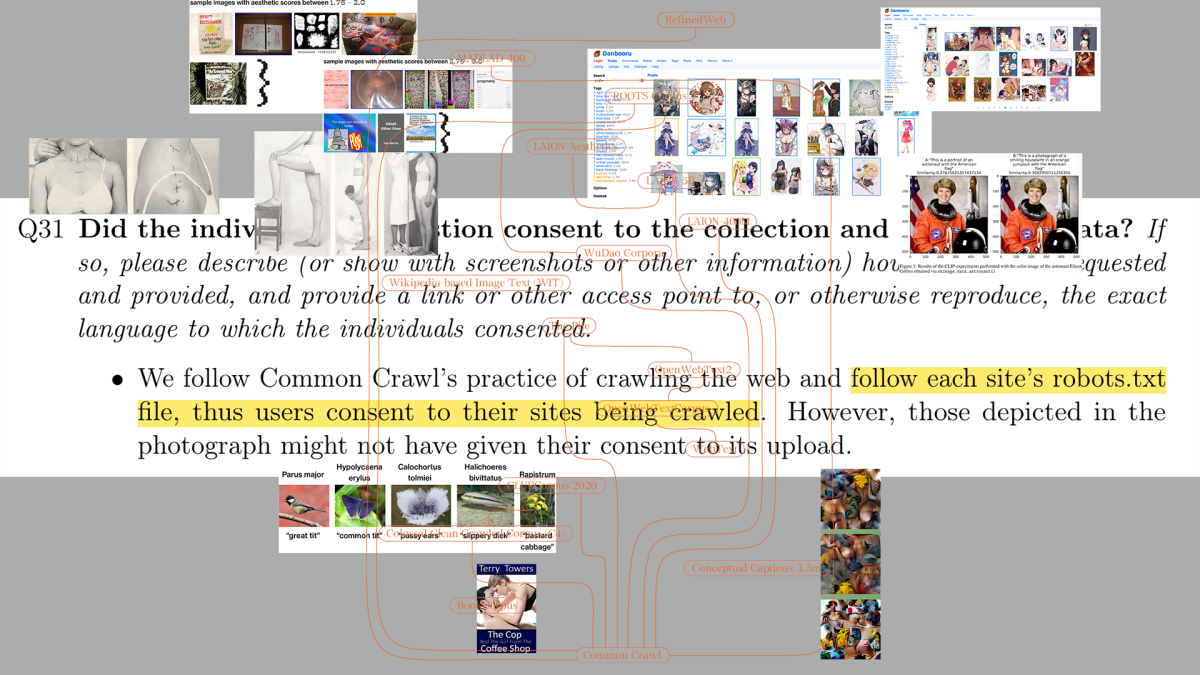

Tech Fascism Not Acceptable

From surveillance systems and algorithmic decision-making to the emerging influence of AI, authoritarian technologies are not just tools: they are mechanisms of control, exclusion, and manipulation, deeply embedded in the systems we interact with daily.

We must therefore ask: How does tech fascism affect us? How can we refuse, intervene in, or sabotage fascist systems? How can practices of civil disobedience be shared and brought to the mainstream? What role can art play in these acts of resistance?

Artists, curators, and collectives worldwide were invited to apply with project ideas, instructions, how-tos, interventions and practices that engage with the contemporary condition.

Essays

To contextualize the call's topic, the foundation commissioned two essays by cultural and media theorists Ana Teixeira Pinto and tante.